The assessment for the ODI’s Data Ethics Facilitators course is to deliver a 30-minute live facilitated data ethics session.

Many participants initially claim that this is not possible, so we have them experience an example session and watch recordings of some previous ones. Here is my session, with the accompanying completed Google Jamboard:

| Time | Activity |

| 1 min | Scenario: You are the brand new data ethics committee for the company Yangzte. As you all know, Yangtze is one of the largest tech companies in Europe. You’ve tasked me – an external data ethics professional – to help you decide if the first proposal before the committee gets approved or not. Has anyone sat on any ethics committees or boards before? |

| 2 mins | Here is the proposal: As one of the largest tech companies in Europe, Yangtze gets hundreds of thousands of job applications per week. Doing it manually is a huge job that wastes lots of time and money. The engineering team has been tasked with designing an algorithm to identify the top five CVs for each role. The algorithm will be trained using CVs from successful candidates over the last 7 years to learn what a “good” CV looks like. Identifying information such as name, date of birth, gender or photographs will be removed before the CV is given to the algorithm. |

| 15 mins | Facilitate a discussion around the key data ethics points. (Individual thinking time – 3 mins). What are the potential positive outcomes of this proposal? What potential risks are there? How can we mitigate the risks and maximise the positive outcomes? Some ideas: Positives Saving time and money If the algorithm is trained well, we can get higher quality CVs through What does well trained mean? Risks: The data is historical data, how can we ensure there isn’t bias? Bias training for engineers Involve HR and their processes Ensure that ⅓ of the data set is left for training data Run the process in tandem with the old system and check for disparities. Put an acceptable differentiation in place before you start. Proxy variables? E.g Language and location of school/university is a decent indication of nationality. Damage to reputation Be transparent about how we are using it. |

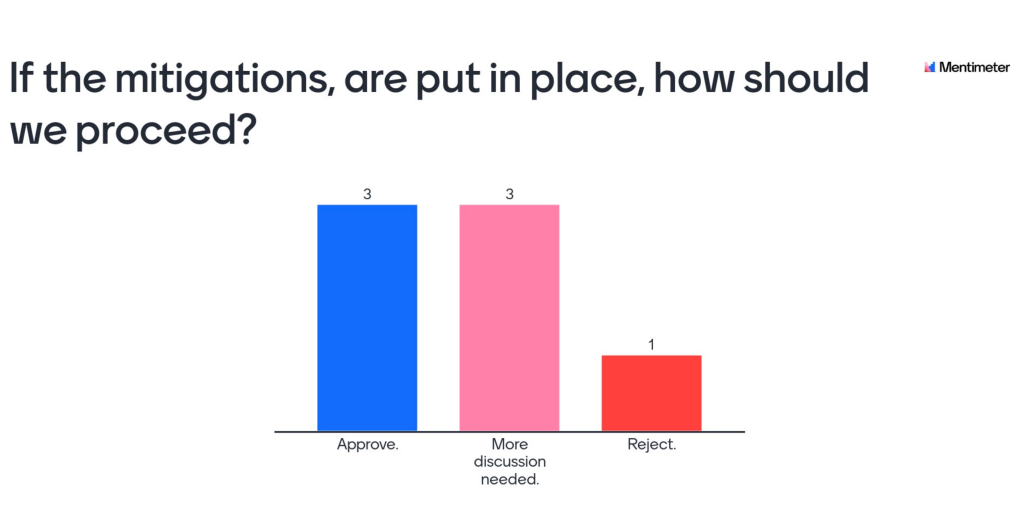

| 10 mins | Overall decision on the proposal (if the mitigations that have been suggested are put in place): proceed/more discussions/reject? Prompt questions depending on results: Is anyone still unhappy? What still needs to be discussed? Is there any way this proposal will proceed? |

What I learnt from the session

I ran the session twice, with 7–9 participants each time. Interestingly, one run was incredibly successful and the other less so. I don’t think I did anything differently either time; the only variable was the participants. This potentially raises an ethical question about the assessment that should be considered when marking.

I was also reminded of the importance of silence. I’ve marked roughly 30 of these assessments already, and it’s very obvious that the better ones leave space for participants to contribute. The same goes for simplicity: I decided to use only a Jamboard (no slides) and rely on the power of discussion.

I think that using Mentimeter works well. I checked Mentimeter’s accessibility beforehand, including its Voluntary Product Accessibility Template (VPAT). Participants like having a definitive ending to the session.